Lab Streaming Layer (LSL) for Synchronizing Multiple Data Streams

Roshini Randeniya

October 1, 2025


```python
"""
Written by Roshini Randeniya and Lucas Kleine

Operation:
Once run from the command line, this script immediately starts an LSL stream.
Each time the 'Enter' key is pressed, it sends a trigger (marker) and plays an audio file.
"""

import sounddevice as sd
import soundfile as sf
from pylsl import StreamInfo, StreamOutlet


def wait_for_keypress():
    print("Press ENTER to start audio playback and send an LSL marker.")
    while True:  # This loop waits for keyboard input
        input_str = input()  # Wait for input from the terminal
        if input_str == "":  # If the Enter key is pressed, proceed
            break


def AudioMarker(audio_file, outlet):  # Play the audio and send a marker
    data, fs = sf.read(audio_file)  # Load the audio file
    print("Playing audio and sending LSL marker...")
    marker_val = [1]
    outlet.push_sample(marker_val)  # Send a marker indicating the start of audio playback
    sd.play(data, fs)  # Play the audio
    sd.wait()  # Wait until the audio has finished playing
    print("Audio playback finished.")


if __name__ == "__main__":  # Main loop
    # Set up the LSL stream for markers
    stream_name = 'AudioMarkers'
    stream_type = 'Markers'
    n_chans = 1
    sr = 0  # Sampling rate set to 0 because markers are sent irregularly
    chan_format = 'int32'
    marker_id = 'uniqueMarkerID12345'

    info = StreamInfo(stream_name, stream_type, n_chans, sr, chan_format, marker_id)
    outlet = StreamOutlet(info)  # Create the LSL outlet

    # Keep the script running and wait for the ENTER key to play audio and send a marker
    while True:
        wait_for_keypress()
        audio_filepath = "/path/to/your/audio_file.wav"  # Replace with the correct path to your audio file
        AudioMarker(audio_filepath, outlet)
        # After playing the audio and sending a marker, go back to waiting for the next keypress
```

By running this file (even before playing the audio), you've started an LSL stream through an outlet. Now we'll view that stream in LabRecorder.

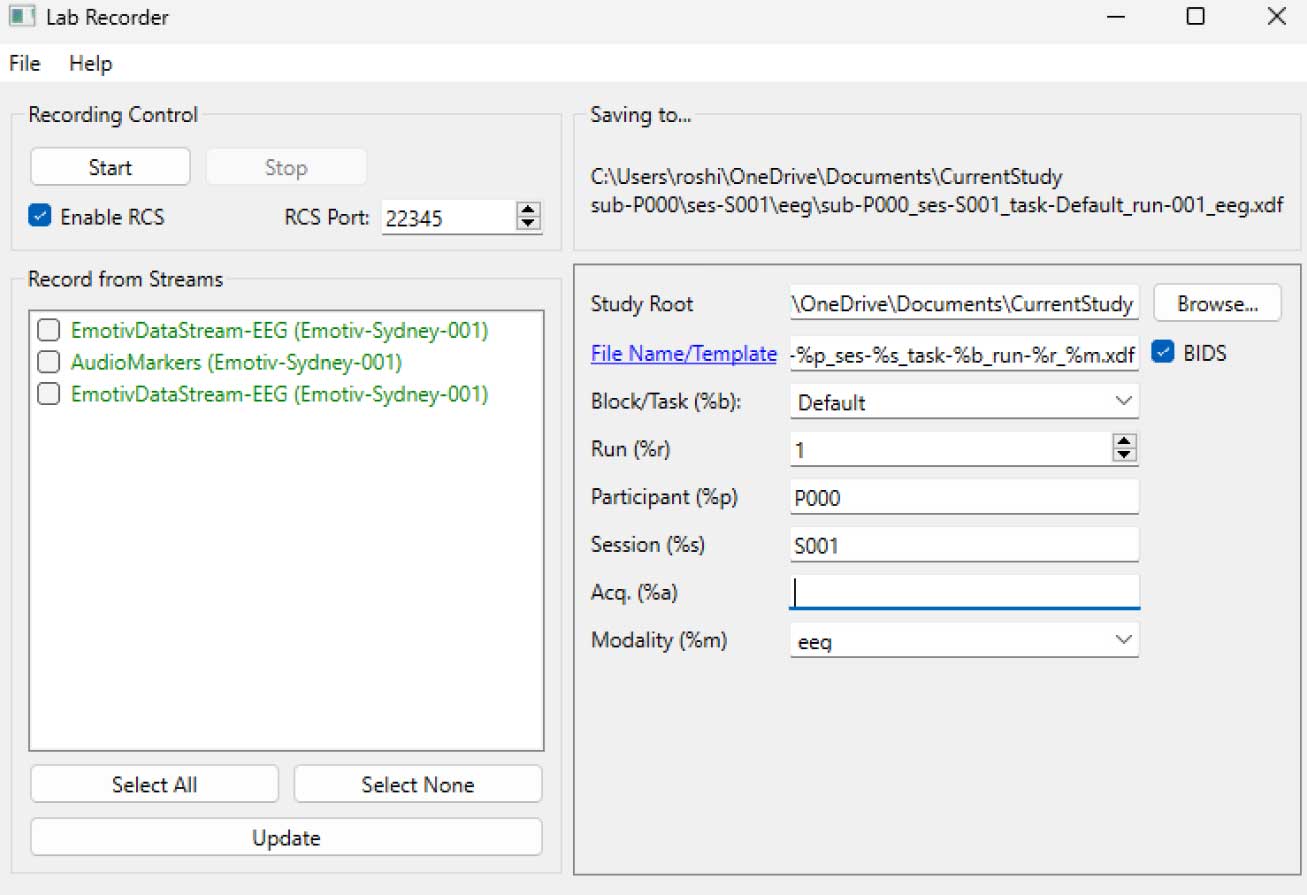

STEP 5 - Use LabRecorder to view and save all LSL streams

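1. Open LabRecorder.
2. Press **Update**. The available LSL streams should be visible in the stream list.
   • You should be able to see the streams from both EmotivPROs (usually called "EmotivDataStream") and the marker stream (called "AudioMarkers").
3. Click **Browse** to select a location to store the data (and set other parameters).
4. Select all streams and press **Record** to start recording.
5. Click **Stop** when you want to end the recording.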

Working with the data

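LabRecorder outputs an XDF (Extensible Data Format) file that contains the data from all recorded streams. An XDF file is organized into streams, each with its own header that describes what it contains (device name, data type, sampling rate, channels, and more). You can use the code block below to open your XDF file and display some basic information.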
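To make that layout concrete before touching a real recording, here is a minimal sketch built on a hypothetical in-memory mock, not on an actual XDF file. It mimics the shape of one entry in the list returned by `pyxdf.load_xdf`: an `info` header whose fields are wrapped in one-element lists (which is why the loading script indexes them with `[0]`), a `time_series` array (samples x channels), and one `time_stamps` value per sample. The helpers `mock_stream` and `summarize_stream`, the 14-channel 128 Hz EEG stream, the `'EEG'` type string and the marker times are all invented for illustration. The sketch also contrasts the declared `nominal_srate` with a rate estimated from the timestamps.

```python
import numpy as np


def mock_stream(name, stype, nominal_srate, time_stamps, time_series):
    """Hypothetical stand-in for one entry of the list returned by pyxdf.load_xdf."""
    time_series = np.asarray(time_series)
    return {
        'info': {  # header fields wrapped in one-element lists, as pyxdf does
            'name': [name],
            'type': [stype],
            'channel_count': [str(time_series.shape[1])],
            'nominal_srate': [str(nominal_srate)],
        },
        'time_series': time_series,               # samples x channels
        'time_stamps': np.asarray(time_stamps),   # one LSL timestamp (s) per sample
    }


def summarize_stream(stream):
    """Collect the header fields worth printing, plus a timestamp-based rate."""
    info = stream['info']
    ts = stream['time_stamps']
    # Effective rate estimated from the timestamps (0.0 if fewer than 2 samples)
    effective = (len(ts) - 1) / (ts[-1] - ts[0]) if len(ts) > 1 else 0.0
    return {
        'name': info['name'][0],
        'type': info['type'][0],
        'channels': int(info['channel_count'][0]),
        'nominal_srate': float(info['nominal_srate'][0]),
        'effective_srate': effective,
        'n_samples': len(ts),
    }


# Invented data: 2 s of a 14-channel stream at 128 Hz, plus 3 irregular markers
eeg = mock_stream('EmotivDataStream', 'EEG', 128,
                  1000.0 + np.arange(256) / 128, np.zeros((256, 14)))
markers = mock_stream('AudioMarkers', 'Markers', 0,
                      [1000.5, 1001.0, 1001.75], [[1], [1], [1]])

for s in (eeg, markers):
    print(summarize_stream(s))
```

For the marker stream, the nominal rate is 0 (irregular), so only the timestamps say anything about when events happened; for the EEG stream, a large gap between nominal and effective rate would be a hint of dropped samples.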

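```python
"""
This example script demonstrates a few basic functions for importing and annotating
EEG data collected with the EmotivPRO software. It uses pyxdf to load the XDF file,
prints some basic metadata, and uses MNE to create an info object and plot the
power spectrum.
"""

import pyxdf
import mne
import matplotlib.pyplot as plt
import numpy as np

# Path to your XDF file
data_path = '/path/to/your/xdf_file.xdf'

# Load the XDF file
streams, fileheader = pyxdf.load_xdf(data_path)
print("XDF file header:", fileheader)
print("Number of streams found:", len(streams))

for i, stream in enumerate(streams):
    print("\nStream", i + 1)
    print("Stream name:", stream['info']['name'][0])
    print("Stream type:", stream['info']['type'][0])
    print("Number of channels:", stream['info']['channel_count'][0])
    sfreq = float(stream['info']['nominal_srate'][0])
    print("Sampling rate:", sfreq)
    print("Number of samples:", len(stream['time_series']))
    print("First 5 data points:", stream['time_series'][:5])

    # Marker streams (such as "AudioMarkers") have an irregular rate (0) and usually
    # no channel description, so skip them for the MNE part
    desc = stream['info']['desc'][0]
    if sfreq == 0 or not desc or 'channels' not in desc:
        continue

    channel_names = [chan['label'][0] for chan in desc['channels'][0]['channel']]
    print("Channel names:", channel_names)
    channel_types = 'eeg'  # Treats every channel as EEG; adjust if the stream has non-EEG channels

    # Create the MNE info object
    info = mne.create_info(channel_names, sfreq, channel_types)
    data = np.array(stream['time_series']).T  # Transpose to channels x samples, as MNE expects
    raw = mne.io.RawArray(data, info)
    raw.compute_psd(fmax=50).plot()  # Plot the power spectral density up to 50 Hz

plt.show()  # Keep the figures open when running as a script
```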
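Because every stream in the XDF file carries its own LSL timestamps, the "AudioMarkers" events can be lined up with the EEG by time rather than by sample count. The sketch below shows one common way to do this with NumPy: for each marker timestamp, find the nearest EEG sample with `np.searchsorted`, then cut a fixed window around it. It assumes you already have `eeg_times` and `eeg_data` (the `time_stamps` and `time_series` of an EEG stream) and `marker_times` (the `time_stamps` of the marker stream), and that both are on a common clock, which `pyxdf.load_xdf` aims for by default through its `synchronize_clocks` option. The function names, window lengths and synthetic data are hypothetical.

```python
import numpy as np


def nearest_sample_indices(eeg_times, marker_times):
    """Index of the EEG sample closest in time to each marker timestamp."""
    eeg_times = np.asarray(eeg_times)
    marker_times = np.asarray(marker_times)
    # Position where each marker would be inserted to keep eeg_times sorted
    idx = np.searchsorted(eeg_times, marker_times)
    idx = np.clip(idx, 1, len(eeg_times) - 1)
    # Step back one sample when the earlier neighbour is closer in time
    left = eeg_times[idx - 1]
    right = eeg_times[idx]
    idx = idx - ((marker_times - left) < (right - marker_times)).astype(int)
    return idx


def extract_epochs(eeg_data, onsets, n_before, n_after):
    """Cut windows of eeg_data (samples x channels) around each onset index.

    Markers too close to the start or end of the recording are skipped.
    """
    epochs = [eeg_data[i - n_before:i + n_after]
              for i in onsets
              if i - n_before >= 0 and i + n_after <= len(eeg_data)]
    if not epochs:
        return np.empty((0, n_before + n_after, eeg_data.shape[1]))
    return np.stack(epochs)


# Synthetic example: 10 s of 2-channel data at 128 Hz and three markers
fs = 128
eeg_times = 1000.0 + np.arange(10 * fs) / fs
eeg_data = np.random.default_rng(0).standard_normal((len(eeg_times), 2))
marker_times = np.array([1002.003, 1005.5, 1009.99])

onsets = nearest_sample_indices(eeg_times, marker_times)
print(onsets)
epochs = extract_epochs(eeg_data, onsets, n_before=int(0.2 * fs), n_after=int(0.8 * fs))
print(epochs.shape)
```

Here the last marker falls less than 0.8 s before the end of the data, so only two windows are kept. If you prefer to stay in MNE, the same marker times (minus the first EEG timestamp, since MNE onsets are relative to the start of the recording) could instead be attached with `mne.Annotations` and `raw.set_annotations` before epoching.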

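Additional Resources

- Download this tutorial as a Jupyter notebook from the EMOTIV GitHub
- Check out the LSL online documentation, including the official README file on GitHub
- You will need one or more supported data acquisition devices to collect data
  - All of EMOTIV's brainwear devices connect to the EmotivPRO software, which has built-in LSL capabilities for sending and receiving data streams
- Additional resources:
  - Code for running LSL with Emotiv devices, with example scripts
  - LSL demo on YouTube
  - SCCN LSL GitHub repository for all associated libraries
  - GitHub repository for submodules and apps
  - HyPyP analysis pipeline for hyperscanning studies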

由 Roshini Randeniya 和 Lucas Kleine 撰写

操作:

在命令行中运行后,此脚本立即启动 LSL 流。每当按下 'Enter' 键时,它会发送一个触发器并播放音频文件。"""

import sounddevice as sd

import soundfile as sf

from pylsl import StreamInfo, StreamOutlet

def wait_for_keypress():

print("按 ENTER 开始音频播放并发送 LSL 标记。")

while True: # This loop waits for a keyboard input input_str = input() # Wait for input from the terminal if input_str == "": # If the enter key is pressed, proceed break

def AudioMarker(audio_file, outlet): # 播放音频并发送标记的函数

data, fs = sf.read(audio_file) # 加载音频文件

print("Playing audio and sending LSL marker...") marker_val = [1] outlet.push_sample(marker_val) # Send marker indicating the start of audio playback sd.play(data, fs) # play the audio sd.wait() # Wait until audio is done playing print("Audio playback finished.")

if name == "main": # 主循环

# 设置 LSL 流用于标记

stream_name = 'AudioMarkers'

stream_type = 'Markers'

n_chans = 1

sr = 0 # 设置为 0 采样率,因为标记不规则

chan_format = 'int32'

marker_id = 'uniqueMarkerID12345'

info = StreamInfo(stream_name, stream_type, n_chans, sr, chan_format, marker_id) outlet = StreamOutlet(info) # create LSL outlet # Keep the script running and wait for ENTER key to play audio and send marker while True: wait_for_keypress() audio_filepath = "/path/to/your/audio_file.wav" # replace with correct path to your audio file AudioMarker(audio_filepath, outlet) # After playing audio and sending a marker, the script goes back to waiting for the next keypress</code></pre><p><em><strong>**By running this file (even before playing the audio), you've initiated an LSL stream through an outlet</strong></em><strong>. Now we'll view that stream in LabRecorder</strong></p><p><strong>STEP 5 - Use LabRecorder to view and save all LSL streams</strong></p><ol><li data-preset-tag="p"><p>Open LabRecorder</p></li><li data-preset-tag="p"><p>Press <em><strong>Update</strong></em>. The available LSL streams should be visible in the stream list<br> • You should be able to see streams from both EmotivPROs (usually called "EmotivDataStream") and the marker stream (called "AudioMarkers")</p></li><li data-preset-tag="p"><p>Click <em><strong>Browse</strong></em> to select a location to store data (and set other parameters)</p></li><li data-preset-tag="p"><p>Select all streams and press <em><strong>Record</strong></em> to start recording</p></li><li data-preset-tag="p"><p>Click Stop when you want to end the recording</p></li></ol><p><br></p><img alt="" src="https://framerusercontent.com/images/HFGuJF9ErVu2Jxrgtqt11tl0No.jpg"><h2><strong>Working with the data</strong></h2><p><strong>LabRecorder outputs an XDF file (Extensible Data Format) that contains data from all the streams. XDF files are structured into, </strong><em><strong>streams</strong></em><strong>, each with a different </strong><em><strong>header</strong></em><strong> that describes what it contains (device name, data type, sampling rate, channels, and more). 
You can use the below codeblock to open your XDF file and display some basic information.</strong></p><pre data-language="JSX"><code>

**运行此文件(即使在播放音频之前),您已通过 outlet 启动了 LSL 流。现在我们将在 LabRecorder 中查看该流。

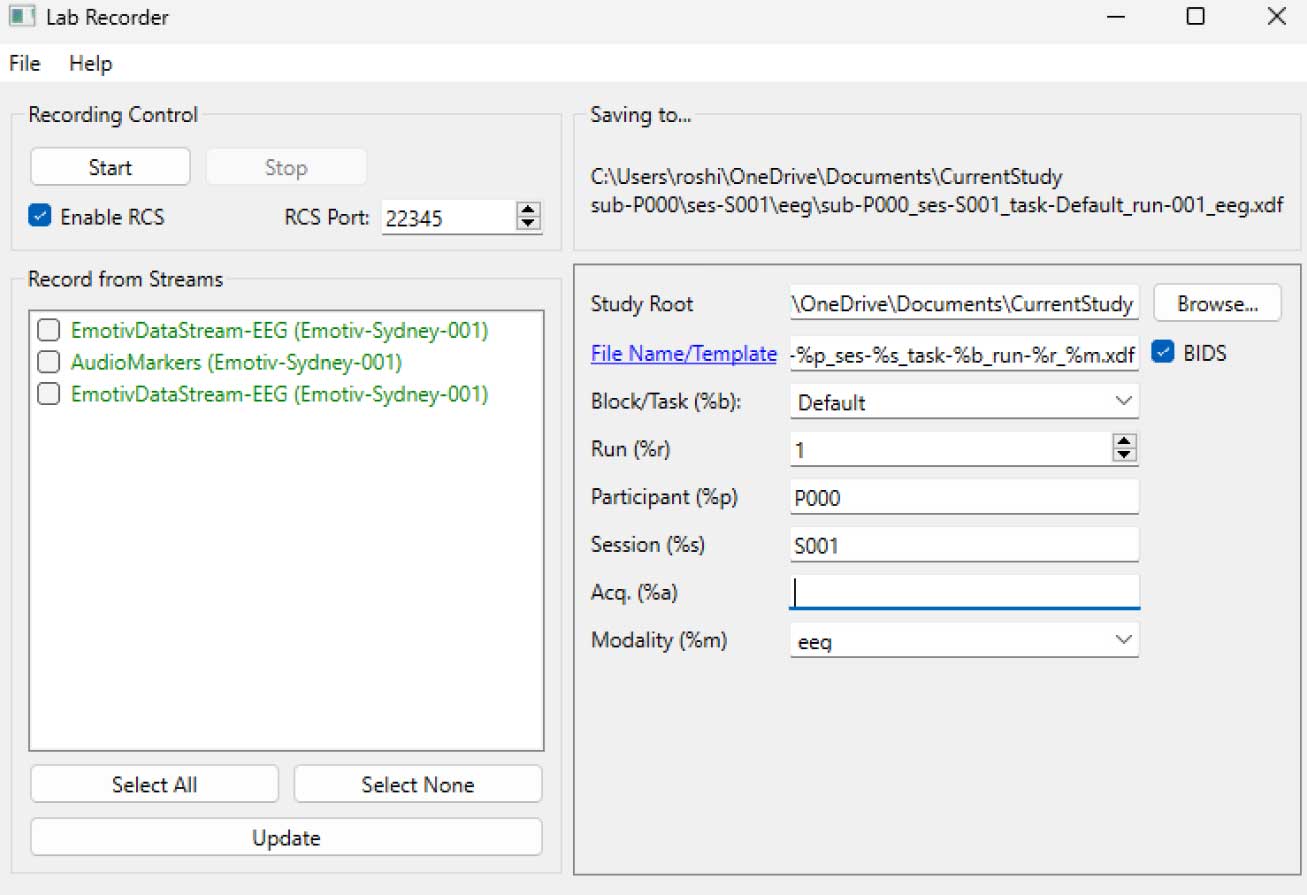

步骤 5 - 使用 LabRecorder 查看和保存所有 LSL 流

打开 LabRecorder

按 更新。可用的 LSL 流应该在流列表中可见

• 您应该能看到来自两个 EmotivPRO 的流(通常称为 "EmotivDataStream")以及标记流(称为 "AudioMarkers")点击 浏览 选择保存数据的位置(并设置其他参数)

选择所有流并按 记录 开始录制

点击停止以结束录制

处理数据

LabRecorder 输出一个 XDF 文件(可扩展数据格式),包含来自所有流的数据。XDF 文件被结构化为,流,每个流都有不同的头部,描述其包含的内容(设备名称、数据类型、采样率、通道等)。您可以使用下面的代码块打开您的 XDF 文件并显示一些基本信息。

此示例脚本演示了一些基本功能,用于导入和注释从 EmotivPRO 软件收集的 EEG 数据。它使用 MNE 加载 XDF 文件,打印一些基本元数据,创建一个 info 对象并绘制功率谱图。"""

import pyxdf

import mne

import matplotlib.pyplot as plt

import numpy as np

您的 XDF 文件路径

data_path = '/path/to/your/xdf_file.xdf'

加载 XDF 文件

streams, fileheader = pyxdf.load_xdf(data_path)

print("XDF 文件头:", fileheader)

print("找到的流数量:", len(streams))

for i, stream in enumerate(streams):

print("\n流", i + 1)

print("流名称:", stream['info']['name'][0])

print("流类型:", stream['info']['type'][0])

print("通道数量:", stream['info']['channel_count'][0])

sfreq = float(stream['info']['nominal_srate'][0])

print("采样率:", sfreq)

print("样本数量:", len(stream['time_series']))

print("打印前 5 个数据点:", stream['time_series'][:5])

channel_names = [chan['label'][0] for chan in stream['info']['desc'][0]['channels'][0]['channel']] print("Channel Names:", channel_names) channel_types = 'eeg'

创建 MNE info 对象

info = mne.create_info(channel_names, sfreq, channel_types)

data = np.array(stream['time_series']).T # 数据需要转置:通道 x 样本

raw = mne.io.RawArray(data, info)

raw.plot_psd(fmax=50) # 绘制简单的谱图(功率谱密度)

附加资源从 EMOTIV GitHub 下载该教程作为 Jupyter 笔记本查阅 LSL 在线文档,包括 GitHub 上的官方 README 文件您将需要一个或多个受支持的数据采集设备来收集数据所有 EMOTIV 的脑电设备都连接到具有 LSL 内置功能的 EmotivPRO 软件,用于发送和接收数据流附加资源:使用 Emotiv 设备运行 LSL 的代码,示例脚本YouTube 上的 LSL 演示SCCN LSL GitHub 存储库中的所有关联库用于子模块和应用程序的 GitHub 存储库HyPyP 分析管道用于超扫描研究

由 Roshini Randeniya 和 Lucas Kleine 撰写

操作:

在命令行中运行后,此脚本立即启动 LSL 流。每当按下 'Enter' 键时,它会发送一个触发器并播放音频文件。"""

import sounddevice as sd

import soundfile as sf

from pylsl import StreamInfo, StreamOutlet

def wait_for_keypress():

print("按 ENTER 开始音频播放并发送 LSL 标记。")

while True: # This loop waits for a keyboard input input_str = input() # Wait for input from the terminal if input_str == "": # If the enter key is pressed, proceed break

def AudioMarker(audio_file, outlet): # 播放音频并发送标记的函数

data, fs = sf.read(audio_file) # 加载音频文件

print("Playing audio and sending LSL marker...") marker_val = [1] outlet.push_sample(marker_val) # Send marker indicating the start of audio playback sd.play(data, fs) # play the audio sd.wait() # Wait until audio is done playing print("Audio playback finished.")

if name == "main": # 主循环

# 设置 LSL 流用于标记

stream_name = 'AudioMarkers'

stream_type = 'Markers'

n_chans = 1

sr = 0 # 设置为 0 采样率,因为标记不规则

chan_format = 'int32'

marker_id = 'uniqueMarkerID12345'

info = StreamInfo(stream_name, stream_type, n_chans, sr, chan_format, marker_id) outlet = StreamOutlet(info) # create LSL outlet # Keep the script running and wait for ENTER key to play audio and send marker while True: wait_for_keypress() audio_filepath = "/path/to/your/audio_file.wav" # replace with correct path to your audio file AudioMarker(audio_filepath, outlet) # After playing audio and sending a marker, the script goes back to waiting for the next keypress</code></pre><p><em><strong>**By running this file (even before playing the audio), you've initiated an LSL stream through an outlet</strong></em><strong>. Now we'll view that stream in LabRecorder</strong></p><p><strong>STEP 5 - Use LabRecorder to view and save all LSL streams</strong></p><ol><li data-preset-tag="p"><p>Open LabRecorder</p></li><li data-preset-tag="p"><p>Press <em><strong>Update</strong></em>. The available LSL streams should be visible in the stream list<br> • You should be able to see streams from both EmotivPROs (usually called "EmotivDataStream") and the marker stream (called "AudioMarkers")</p></li><li data-preset-tag="p"><p>Click <em><strong>Browse</strong></em> to select a location to store data (and set other parameters)</p></li><li data-preset-tag="p"><p>Select all streams and press <em><strong>Record</strong></em> to start recording</p></li><li data-preset-tag="p"><p>Click Stop when you want to end the recording</p></li></ol><p><br></p><img alt="" src="https://framerusercontent.com/images/HFGuJF9ErVu2Jxrgtqt11tl0No.jpg"><h2><strong>Working with the data</strong></h2><p><strong>LabRecorder outputs an XDF file (Extensible Data Format) that contains data from all the streams. XDF files are structured into, </strong><em><strong>streams</strong></em><strong>, each with a different </strong><em><strong>header</strong></em><strong> that describes what it contains (device name, data type, sampling rate, channels, and more). 
You can use the below codeblock to open your XDF file and display some basic information.</strong></p><pre data-language="JSX"><code>

**运行此文件(即使在播放音频之前),您已通过 outlet 启动了 LSL 流。现在我们将在 LabRecorder 中查看该流。

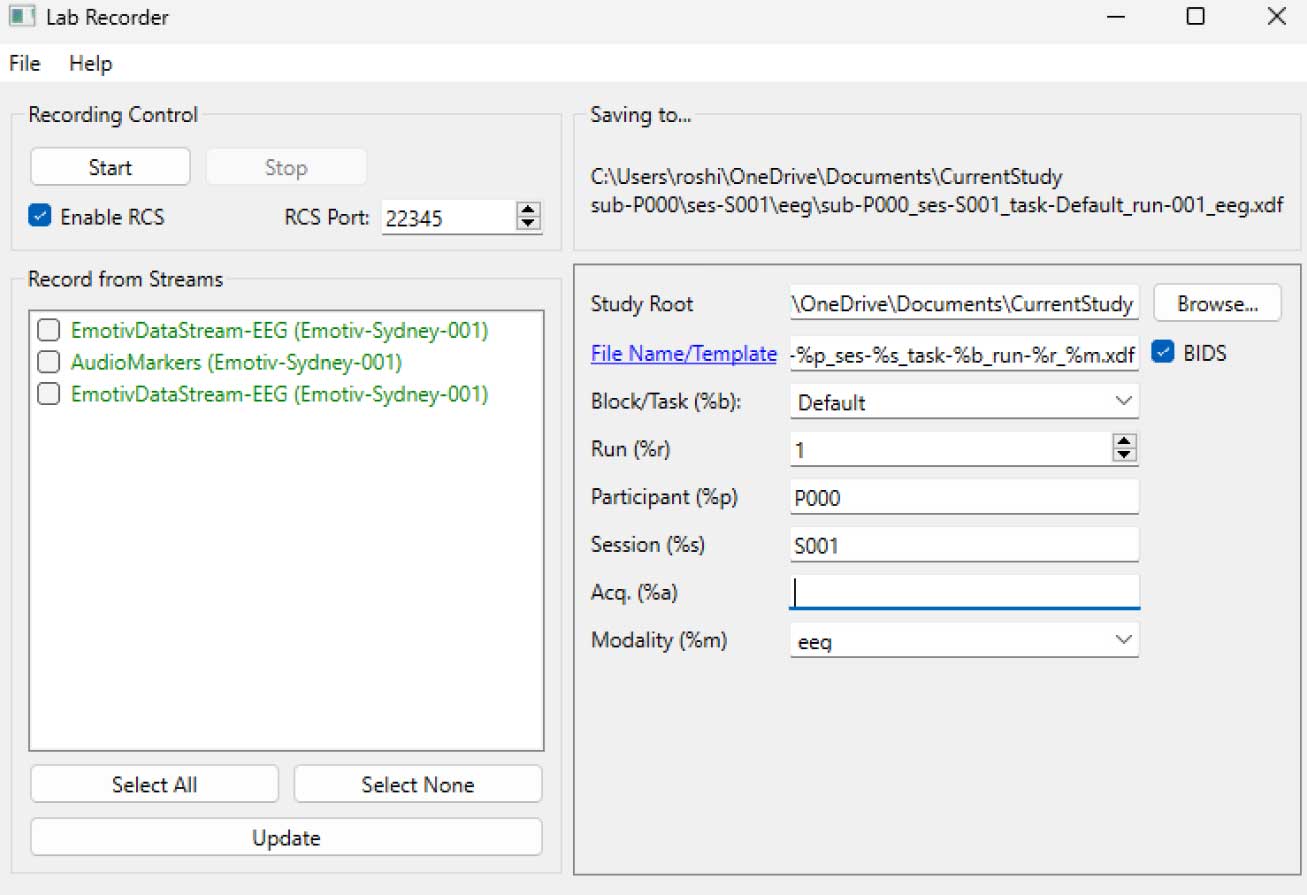

步骤 5 - 使用 LabRecorder 查看和保存所有 LSL 流

打开 LabRecorder

按 更新。可用的 LSL 流应该在流列表中可见

• 您应该能看到来自两个 EmotivPRO 的流(通常称为 "EmotivDataStream")以及标记流(称为 "AudioMarkers")点击 浏览 选择保存数据的位置(并设置其他参数)

选择所有流并按 记录 开始录制

点击停止以结束录制

处理数据

LabRecorder 输出一个 XDF 文件(可扩展数据格式),包含来自所有流的数据。XDF 文件被结构化为,流,每个流都有不同的头部,描述其包含的内容(设备名称、数据类型、采样率、通道等)。您可以使用下面的代码块打开您的 XDF 文件并显示一些基本信息。

此示例脚本演示了一些基本功能,用于导入和注释从 EmotivPRO 软件收集的 EEG 数据。它使用 MNE 加载 XDF 文件,打印一些基本元数据,创建一个 info 对象并绘制功率谱图。"""

import pyxdf

import mne

import matplotlib.pyplot as plt

import numpy as np

您的 XDF 文件路径

data_path = '/path/to/your/xdf_file.xdf'

加载 XDF 文件

streams, fileheader = pyxdf.load_xdf(data_path)

print("XDF 文件头:", fileheader)

print("找到的流数量:", len(streams))

for i, stream in enumerate(streams):

print("\n流", i + 1)

print("流名称:", stream['info']['name'][0])

print("流类型:", stream['info']['type'][0])

print("通道数量:", stream['info']['channel_count'][0])

sfreq = float(stream['info']['nominal_srate'][0])

print("采样率:", sfreq)

print("样本数量:", len(stream['time_series']))

print("打印前 5 个数据点:", stream['time_series'][:5])

channel_names = [chan['label'][0] for chan in stream['info']['desc'][0]['channels'][0]['channel']] print("Channel Names:", channel_names) channel_types = 'eeg'

创建 MNE info 对象

info = mne.create_info(channel_names, sfreq, channel_types)

data = np.array(stream['time_series']).T # 数据需要转置:通道 x 样本

raw = mne.io.RawArray(data, info)

raw.plot_psd(fmax=50) # 绘制简单的谱图(功率谱密度)

附加资源从 EMOTIV GitHub 下载该教程作为 Jupyter 笔记本查阅 LSL 在线文档,包括 GitHub 上的官方 README 文件您将需要一个或多个受支持的数据采集设备来收集数据所有 EMOTIV 的脑电设备都连接到具有 LSL 内置功能的 EmotivPRO 软件,用于发送和接收数据流附加资源:使用 Emotiv 设备运行 LSL 的代码,示例脚本YouTube 上的 LSL 演示SCCN LSL GitHub 存储库中的所有关联库用于子模块和应用程序的 GitHub 存储库HyPyP 分析管道用于超扫描研究